If you use EFS with default settings, your AWS webserver will fail 4-6 weeks after putting it into production. Just when you think everything is fine after running for a few weeks with no incidents … alarms going off, sites down, IOWAIT up?

Check the AWS disk status. The EFS console has a monitoring page which gives the deceptive appearance of being useful but actually omits the most important metrics, so don’t look there; go over to CloudWatch and open the EFS metrics.

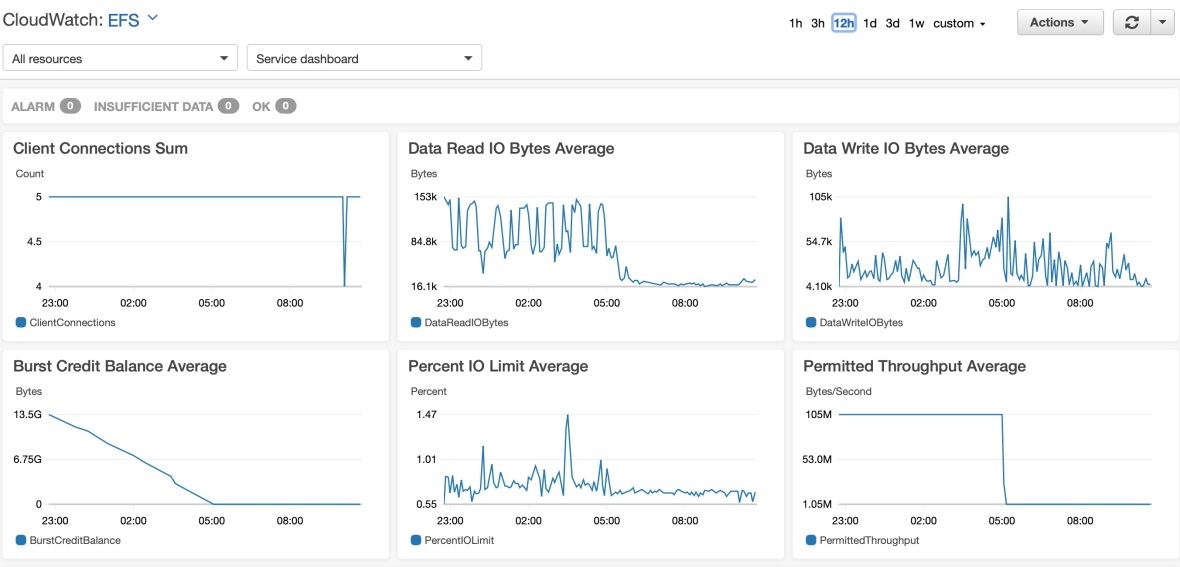

A pattern like this shows Burst Credit Balance dropping to zero, after which permitted throughput is immediately reduced, from 105MB/s to 1.05MB/s in this case.
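The same Burst Credit Balance series can be pulled out of CloudWatch with a script, which is handy for spotting the downward slope before it reaches zero. The following is a minimal sketch, assuming `boto3` is installed and AWS credentials are configured; the function names, the default region and the 14-day window are illustrative choices, not anything AWS prescribes.

```python
from datetime import datetime, timedelta, timezone


def burst_credit_query(file_system_id, days=14, period_seconds=3600):
    """Build the arguments for CloudWatch get_metric_statistics."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/EFS",
        "MetricName": "BurstCreditBalance",
        "Dimensions": [{"Name": "FileSystemId", "Value": file_system_id}],
        "StartTime": end - timedelta(days=days),
        "EndTime": end,
        "Period": period_seconds,
        # The hourly minimum shows how low the balance actually dipped.
        "Statistics": ["Minimum"],
    }


def fetch_burst_credits(file_system_id, region="eu-west-1"):
    """Return (timestamp, minimum balance in bytes) pairs, oldest first.

    The region default is a placeholder; use the region of your file system.
    """
    import boto3  # imported lazily so the query builder works without boto3

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    response = cloudwatch.get_metric_statistics(**burst_credit_query(file_system_id))
    points = sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
    return [(p["Timestamp"], p["Minimum"]) for p in points]
```

Calling `fetch_burst_credits("fs-12345678")` (a placeholder file system ID) returns the balance in bytes per hour; a series that only ever goes down is the warning sign.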

According to the table in Amazon’s documentation at https://docs.aws.amazon.com/efs/latest/ug/performance.html, a 10GB EFS disk can only drive up to 0.5 MiB/s continuously. MiB/s is mebibytes per second (bytes, not bits), so that’s approx 512KB/s or 0.52MB/s.
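To make the unit arithmetic explicit, here is a small sketch of the baseline calculation. It assumes the rule from the AWS performance documentation for Bursting mode, 50 KiB/s of baseline throughput per GiB stored, and that MiB/s means mebibytes per second; the function name is made up for illustration.

```python
KIB = 1024
MIB = 1024 ** 2
GIB = 1024 ** 3

# Assumption: Bursting mode earns 50 KiB/s of baseline per GiB stored.
BASELINE_BYTES_PER_SEC_PER_GIB = 50 * KIB


def baseline_bytes_per_sec(stored_bytes):
    """Baseline (continuous) throughput in bytes/s for a given stored size."""
    return stored_bytes / GIB * BASELINE_BYTES_PER_SEC_PER_GIB


rate = baseline_bytes_per_sec(10 * GIB)
print(f"{rate / MIB:.2f} MiB/s = {rate / KIB:.0f} KiB/s = {rate * 8 / 1e6:.2f} Mbit/s")
```

For a 10 GiB disk this works out to 500 KiB/s, which the documentation table rounds to 0.5 MiB/s.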

As that’s too low to run normal webserver activity, Burst Credit Balance is gradually reduced to zero, triggering failure.
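The delay before failure follows from simple arithmetic: credits drain at the difference between average usage and the baseline. A rough sketch, assuming the initial burst credit balance of 2.1 TiB that AWS documents for new file systems; the 1.25 MiB/s average load is a made-up figure for illustration.

```python
MIB = 1024 ** 2
TIB = 1024 ** 4
SECONDS_PER_DAY = 86400


def days_until_credits_run_out(avg_bytes_per_sec, baseline_bytes_per_sec,
                               credit_balance_bytes=2.1 * TIB):
    """Estimate days until the burst credit balance reaches zero.

    Returns None when average usage is at or below the baseline,
    because credits then accumulate instead of draining.
    """
    net_drain = avg_bytes_per_sec - baseline_bytes_per_sec
    if net_drain <= 0:
        return None
    return credit_balance_bytes / net_drain / SECONDS_PER_DAY


# Hypothetical load: 1.25 MiB/s average against a 0.5 MiB/s baseline.
days = days_until_credits_run_out(1.25 * MIB, 0.5 * MIB)
print(f"credits last about {days:.0f} days")
```

With those assumed numbers the balance lasts roughly 34 days, which matches the "fine for a few weeks, then down" pattern.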

So how to resolve?

- Set to Provisioned throughput at as high a level as currently needed

- Review and reduce disk-intensive operations:

- Monitor throughput needed after reducing disk operations

- Reduce Provisioned throughput or switch back to Bursting throughput depending on the IO needed.

Provisioned throughput was introduced in July 2018. Before that, the solution was to increase the EFS disk size purely to increase the throughput entitlement, which can also work, but whether by increasing space or provisioned throughput, either way you pay!
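Switching throughput mode, as in the first and last steps above, can be scripted instead of clicked through the console. This is a sketch, assuming `boto3` and configured credentials; it uses the EFS `update_file_system` call, while the helper names and the default region are illustrative.

```python
def provisioned_throughput_request(file_system_id, mibps):
    """Arguments for switching a file system to Provisioned throughput."""
    if mibps < 1:
        # 1 MiB/s is the smallest amount that can be provisioned.
        raise ValueError("provisioned throughput must be at least 1 MiB/s")
    return {
        "FileSystemId": file_system_id,
        "ThroughputMode": "provisioned",
        "ProvisionedThroughputInMibps": float(mibps),
    }


def bursting_throughput_request(file_system_id):
    """Arguments for switching a file system back to Bursting throughput."""
    return {"FileSystemId": file_system_id, "ThroughputMode": "bursting"}


def apply_throughput_change(request, region="eu-west-1"):
    """Send the change to EFS. The region default is a placeholder."""
    import boto3  # imported lazily so the request builders work without boto3

    efs = boto3.client("efs", region_name=region)
    return efs.update_file_system(**request)
```

For example, `apply_throughput_change(provisioned_throughput_request("fs-12345678", 5))` would provision 5 MiB/s on the placeholder file system `fs-12345678`.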

File systems running in Provisioned Throughput mode still earn burst credits so the Provisioned throughput is a hard minimum rather than a hard maximum.

After offloading all static file access to CloudFront/S3, turning off all unnecessary disk access, and ensuring the EFS disk held only website files (no logs etc.), the EFS metrics on an 11GB active webserver disk were still showing 150k DataReadIOBytes (though, confusingly, only 5K TotalIOBytes). That exceeds the baseline throughput limit, so burst capacity is in continuous use and will run out and fail again after about a month of website operation. There is no way to manage this on EFS without increasing the throughput.

Provisioning maximum throughput is expensive, but the minimum of 1 MiB/s is $6.60/month and is additional to the baseline throughput, so if it was 0.5 MiB/s before, the minimum provisioned should raise it to 1.5 MiB/s. According to the documentation this is also burstable; however, real-world experience suggests it is not: performance remains horrible if the bandwidth is set too low.
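Part of the confusion with these metrics is that the EFS byte counters are reported per CloudWatch period rather than per second. A sketch of the conversion, assuming you read the `Sum` statistic of a byte metric such as `DataReadIOBytes` and divide by the period length; the sample figures are hypothetical, not taken from the graphs above.

```python
MIB = 1024 ** 2


def average_bytes_per_sec(sum_bytes, period_seconds):
    """Average throughput over one CloudWatch period, from the Sum statistic."""
    return sum_bytes / period_seconds


def exceeds_baseline(sum_bytes, period_seconds, baseline_bytes_per_sec):
    """True when the period's average throughput is above the baseline,
    meaning burst credits were being spent during that period."""
    return average_bytes_per_sec(sum_bytes, period_seconds) > baseline_bytes_per_sec


# Hypothetical datapoint: 45 MiB read in a 60-second period,
# compared against a 0.5 MiB/s baseline.
rate = average_bytes_per_sec(45 * MIB, 60)
print(f"{rate / MIB:.2f} MiB/s, over baseline: {exceeds_baseline(45 * MIB, 60, 0.5 * MIB)}")
```

Comparing the per-second figure against the baseline, period by period, shows whether credits are being spent or earned.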

For details, see the Amazon paper on EFS, “Choosing Between the Different Throughput & Performance Modes”.

Finally, however, even when Burst Credit Balance is available, the update speed on e.g. an 11GB EFS disk is painfully, painfully slow: slow enough that updates appear to fail because web requests take longer than expected. Internet discussions suggest the current solution is still to create large dummy files on EFS to artificially increase the size of the EFS disk allocation and so raise the throughput limits. However, this violates the principle of only paying for what you use.
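How much dummy data that workaround needs can be estimated by inverting the baseline rule. A sketch, again assuming 50 KiB/s of baseline per GiB stored in Bursting mode; the 5 MiB/s target is an arbitrary example.

```python
import math

KIB = 1024
MIB = 1024 ** 2

# Assumption: Bursting mode earns 50 KiB/s of baseline per GiB stored.
BASELINE_BYTES_PER_SEC_PER_GIB = 50 * KIB


def padding_needed_gib(current_gib, target_mib_per_sec):
    """GiB of dummy files needed to lift the baseline to the target rate."""
    required_gib = target_mib_per_sec * MIB / BASELINE_BYTES_PER_SEC_PER_GIB
    return max(0, math.ceil(required_gib - current_gib))


# An 11 GiB disk that should sustain 5 MiB/s continuously.
print(padding_needed_gib(11, 5), "GiB of padding")
```

For the 11 GiB disk in this example, a 5 MiB/s baseline needs about 92 GiB of padding, all of which is billed as storage.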
